Presentation: An efficient probabilistic context-free parsing algorithm that computes prefix probabilities

Contents

- 2. Overview: What is this paper all about? Key ideas from the title: Context-Free Parsing, Probabilistic, Computes Prefix Probabilities, Efficient

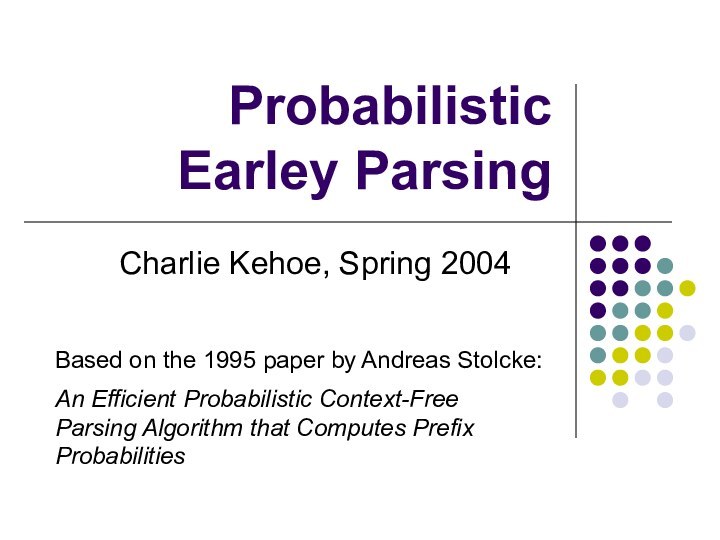

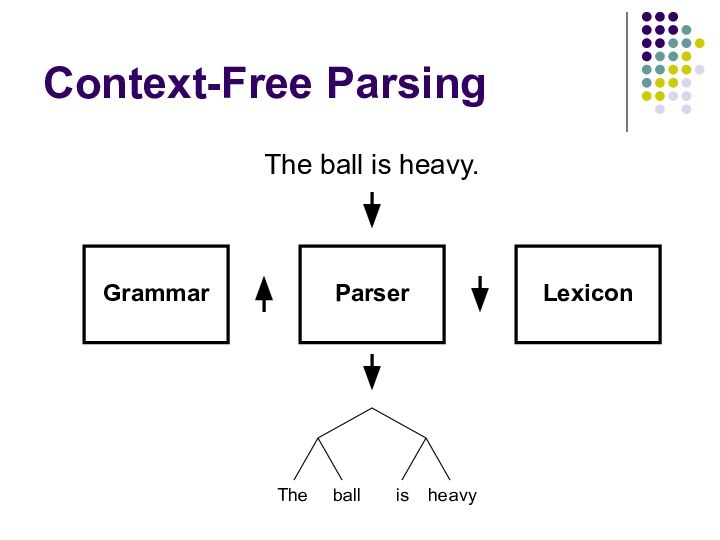

- 3. Context-Free Parsing (diagram labels: “The ball is heavy.”, Parser, “The ball is heavy”)

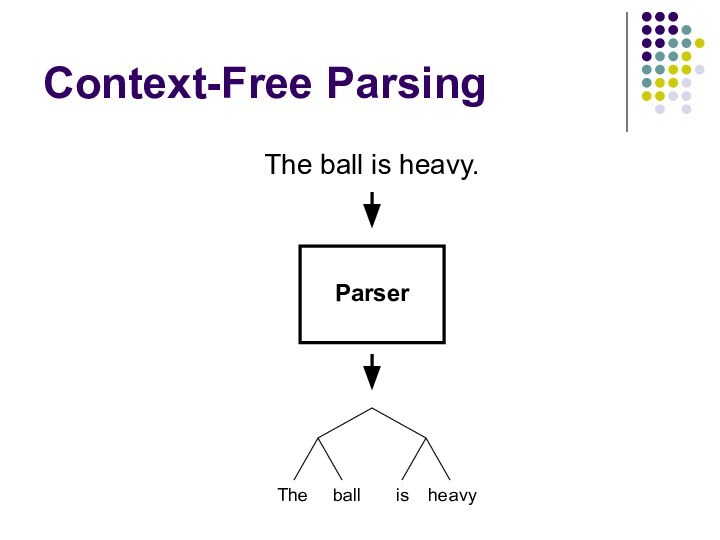

- 4. Context-Free Parsing (diagram labels: Parser, Grammar, “The ball is heavy.”, “The ball is heavy”)

- 5. Context-Free Parsing (diagram labels: Parser, Grammar, Lexicon, “The ball is heavy.”, “The ball is heavy”)

- 6. Context-Free Parsing: What if there are multiple legal parses?

- 7. Probabilistic Parsing: Use probabilities to find the most likely parse

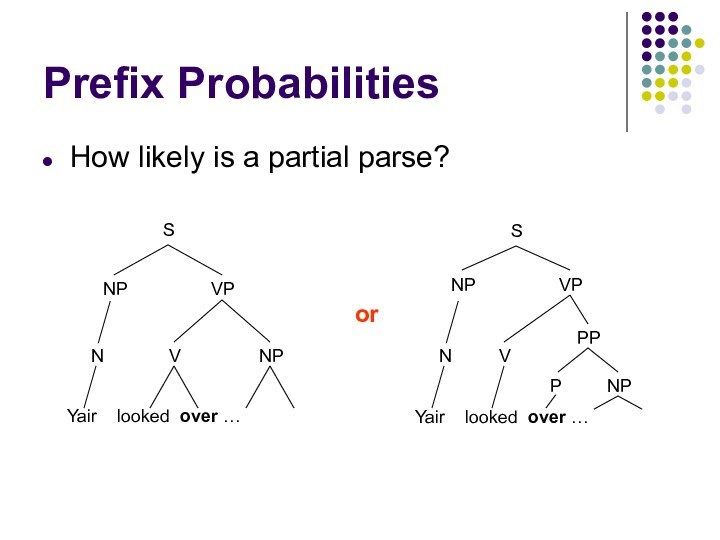

- 8. Prefix Probabilities: How likely is a partial parse? (example: “Yair looked over …”)

- 9. Efficiency: The Earley algorithm (upon which Stolcke builds) …

- 10. Parsing Algorithms: How do we construct a parse tree?

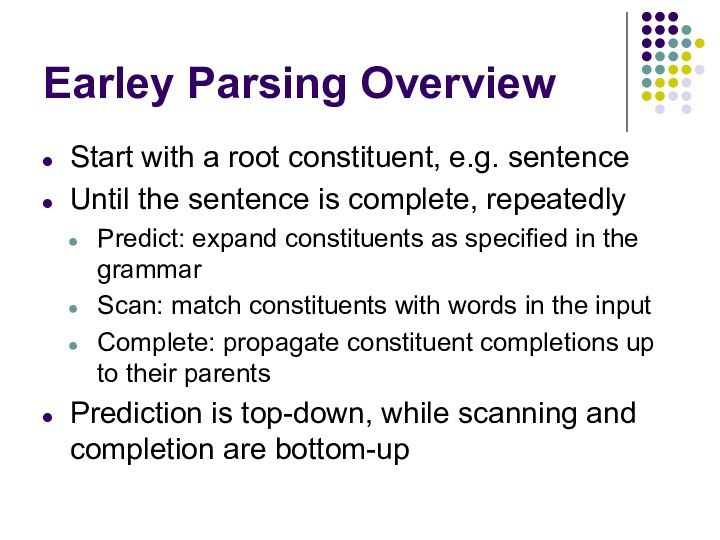

- 11. Earley Parsing Overview: Start with a root constituent, …

- 12. Earley Parsing Overview: Earley parsing uses a chart …

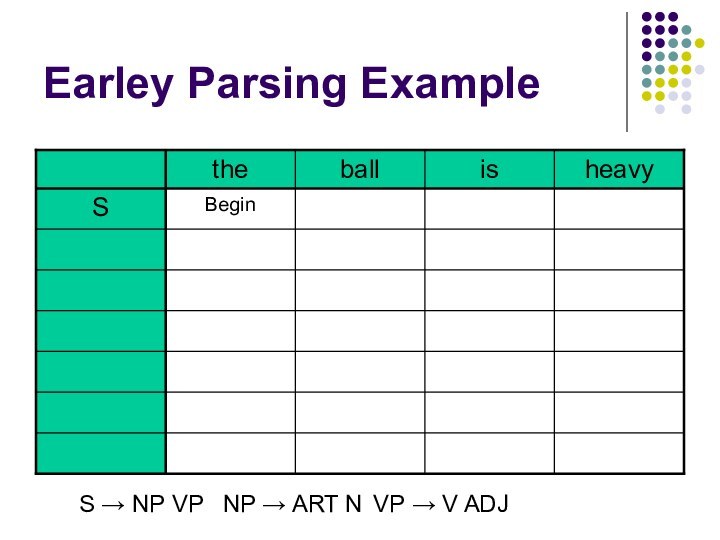

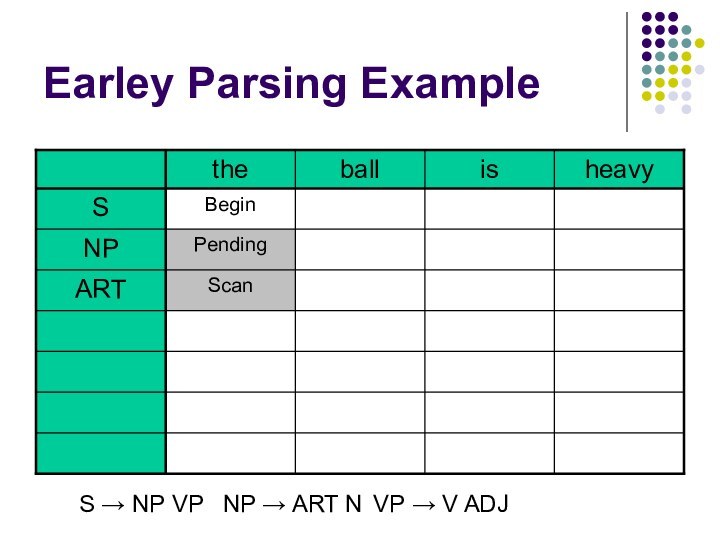

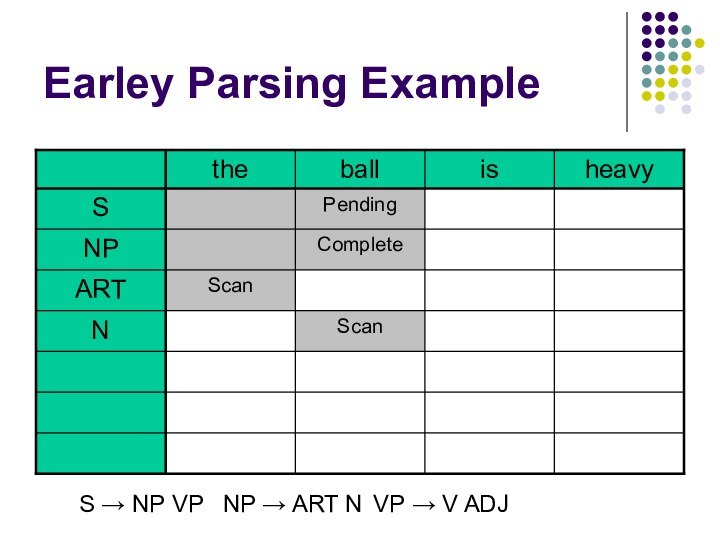

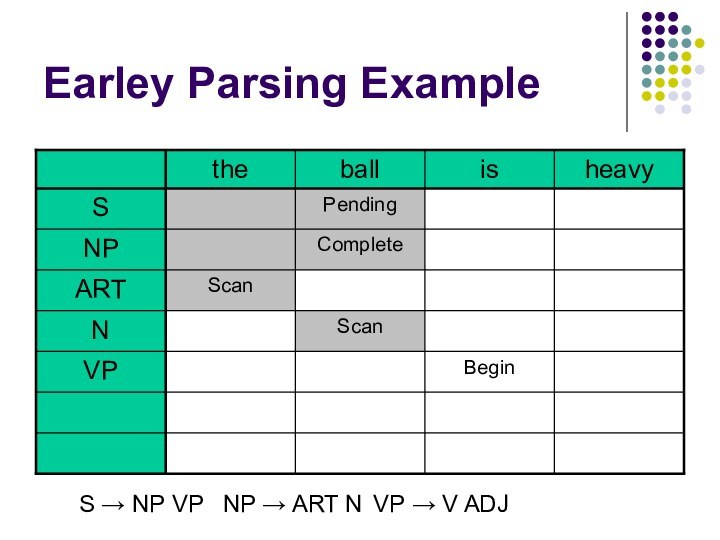

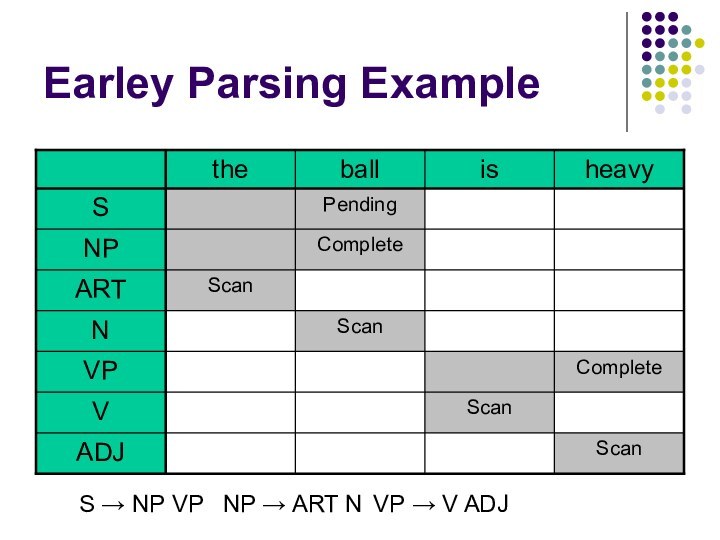

- 13. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 14. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 15. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 16. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 17. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 18. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 19. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 20. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

- 21. Earley Parsing Example: S → NP VP, NP → ART N, VP → V ADJ

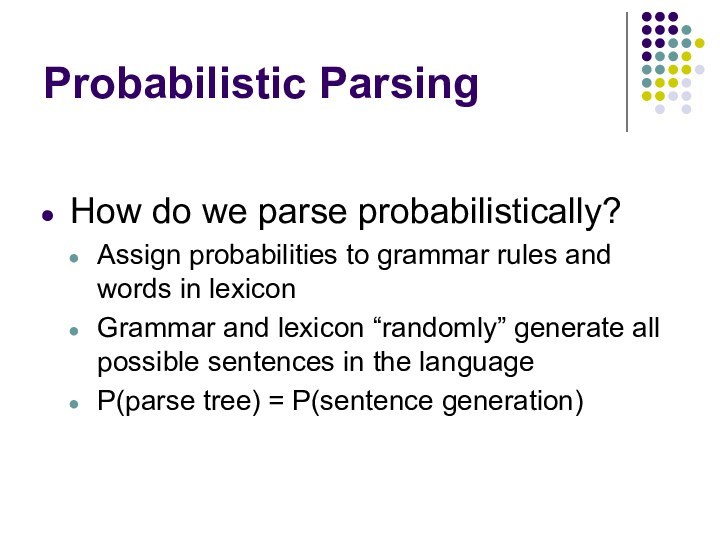

- 22. Probabilistic Parsing: How do we parse probabilistically? Assign probabilities …

- 23. Probabilistic Parsing: Terminology. Earley state: each step of the …

- 24. Probabilistic Parsing: Can represent the parse as a …

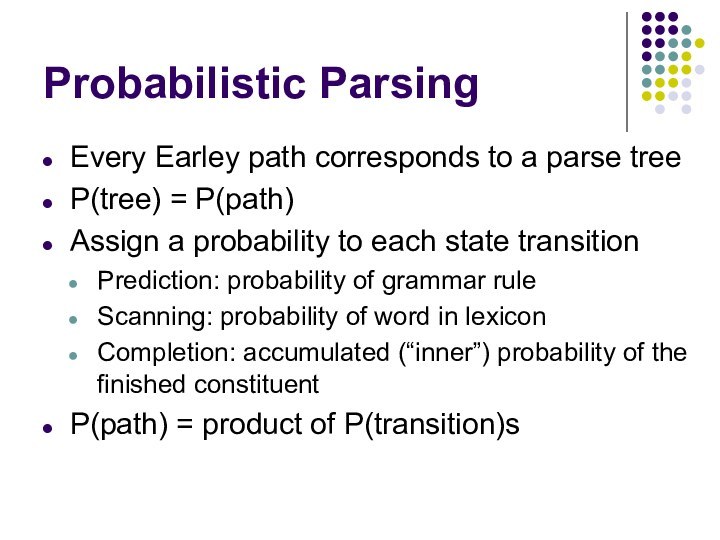

- 25. Probabilistic Parsing: Every Earley path corresponds to a …

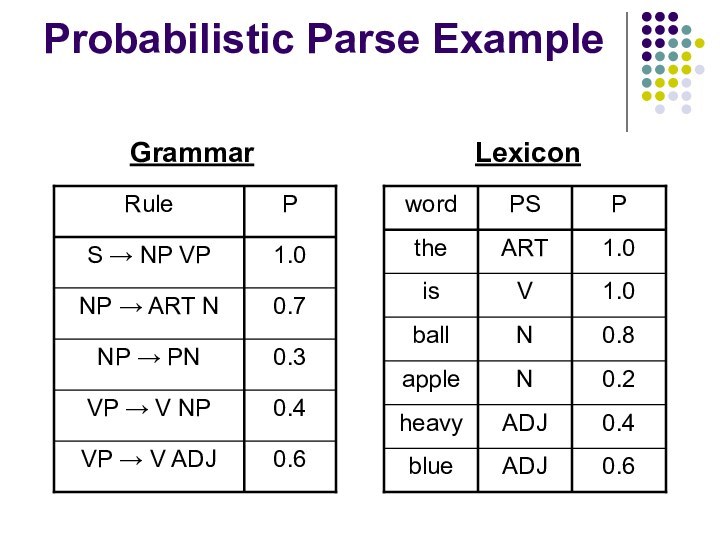

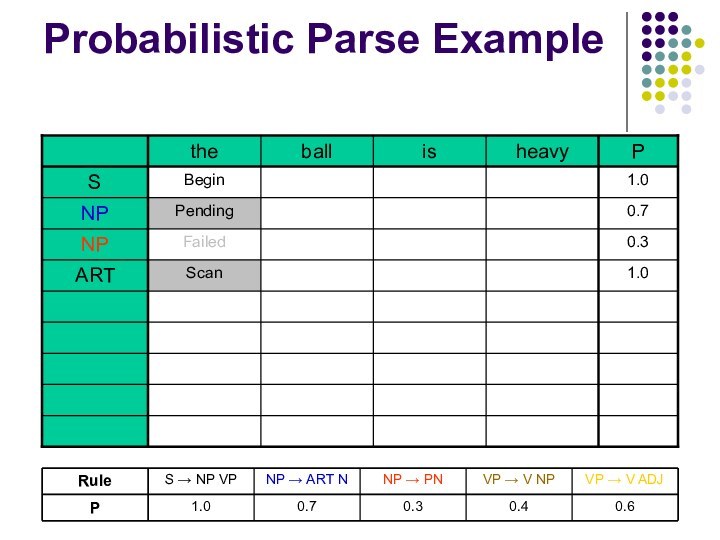

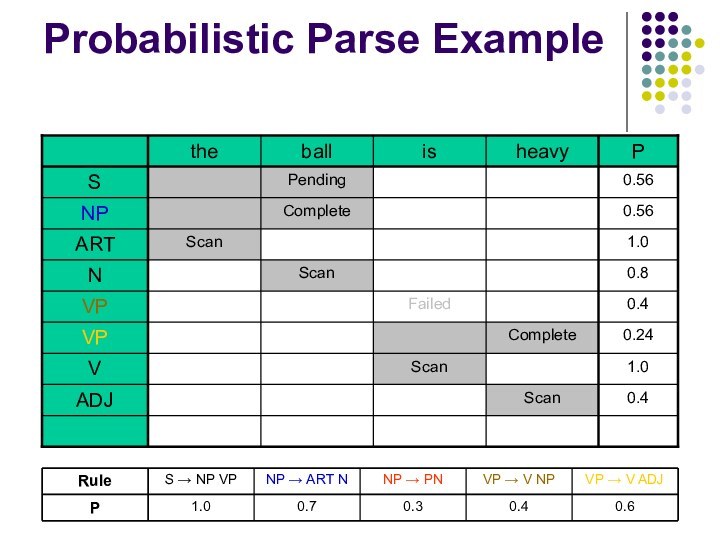

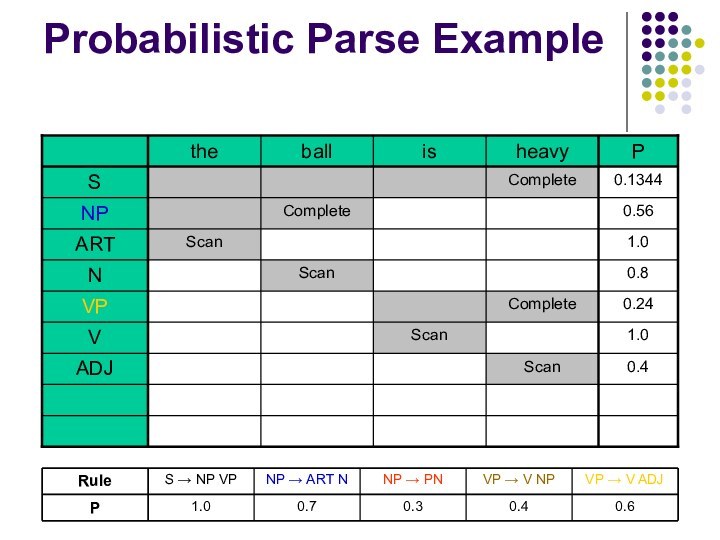

- 26. Probabilistic Parse Example: Grammar, Lexicon

- 27. Probabilistic Parse Example

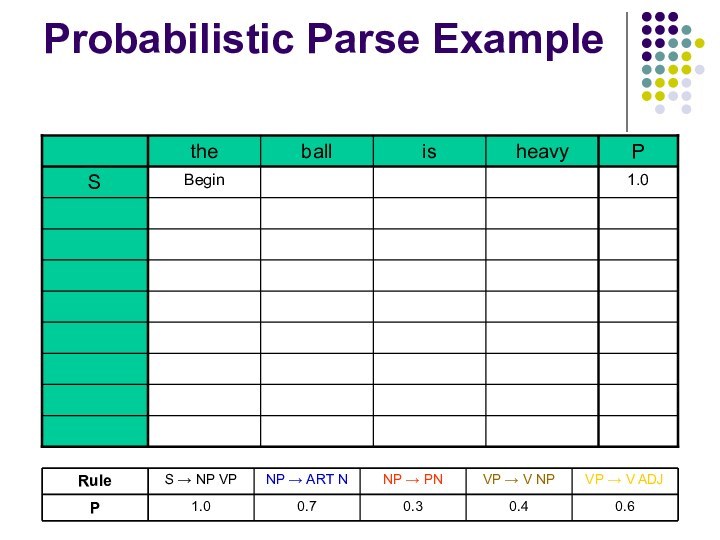

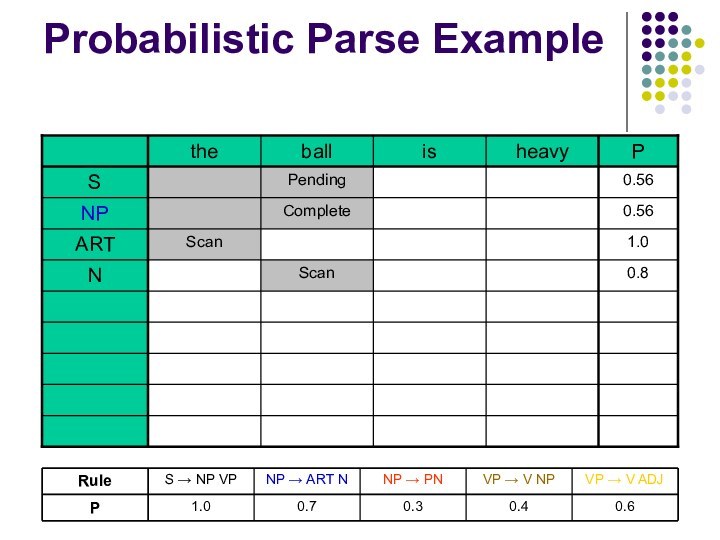

- 28. Probabilistic Parse Example

- 29. Probabilistic Parse Example

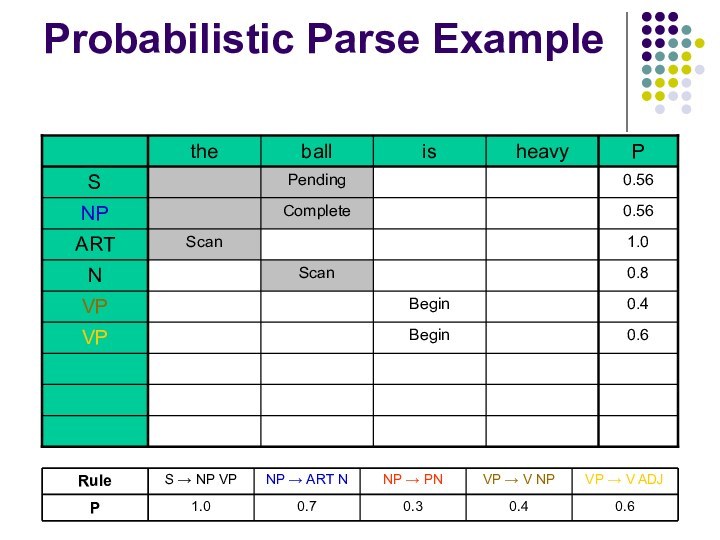

- 30. Probabilistic Parse Example

- 31. Probabilistic Parse Example

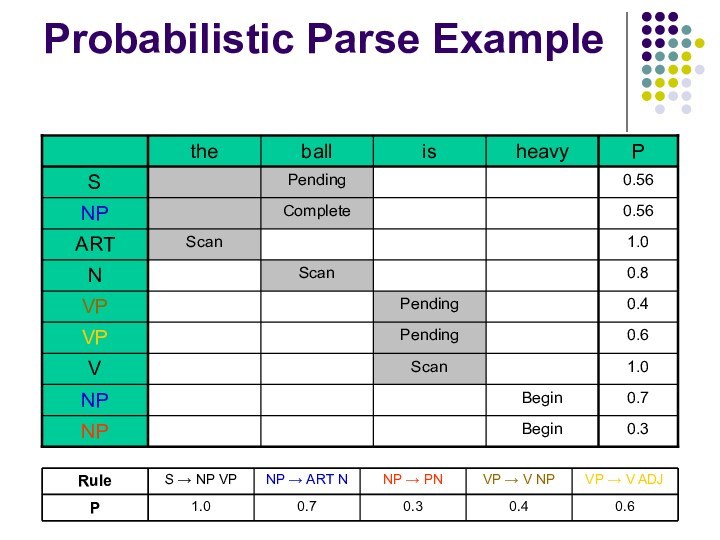

- 32. Probabilistic Parse Example

- 33. Probabilistic Parse Example

- 34. Probabilistic Parse Example

- 35. Probabilistic Parse Example

- 36. Probabilistic Parse Example

- 37. Probabilistic Parse Example

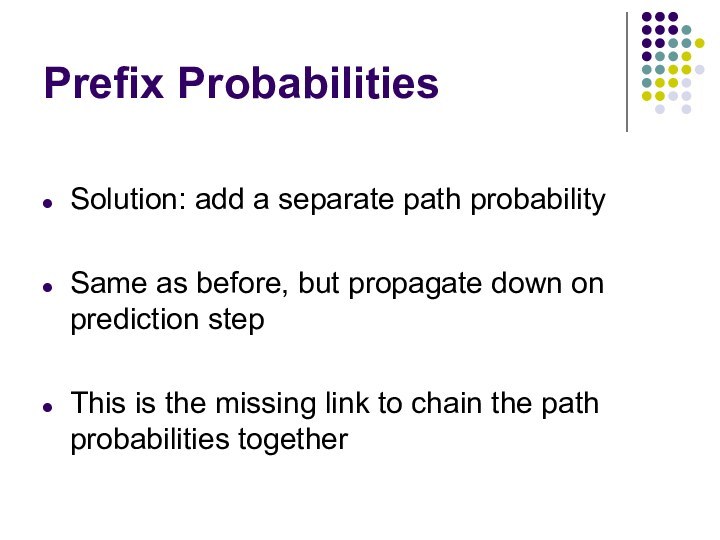

- 38. Prefix Probabilities: Current algorithm reports parse tree probability …

- 39. Prefix Probabilities: Solution: add a separate path probability. Same …

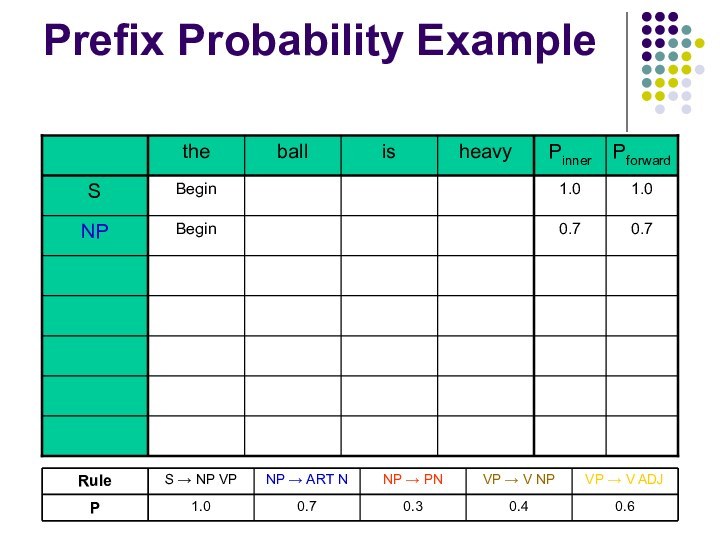

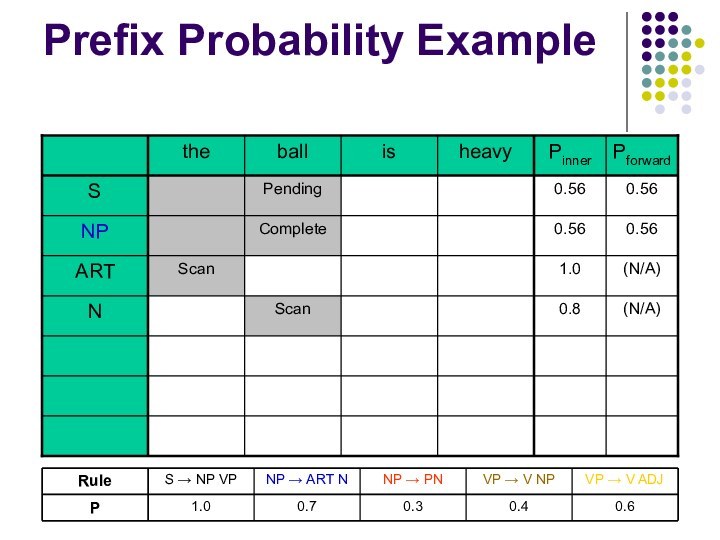

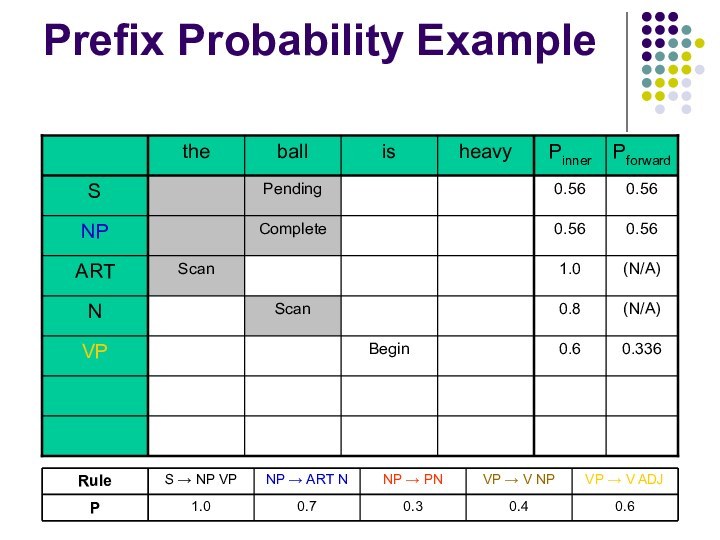

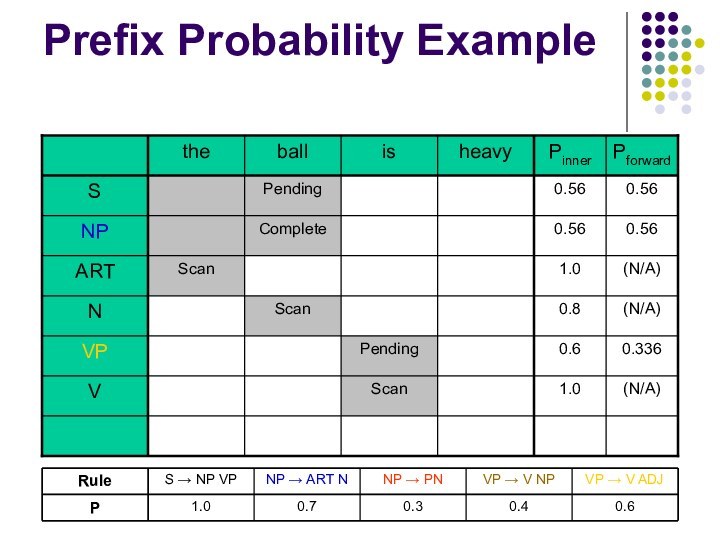

- 40. Prefix Probability Example

- 41. Prefix Probability Example

- 42. Prefix Probability Example

- 43. Prefix Probability Example

- 44. Prefix Probability Example

- 45. Prefix Probability Example

- 46. Prefix Probability Example

- 47. Prefix Probability Example

- 48. Prefix Probability Example

Slide 2

Overview

What is this paper all about?

Key ideas from the title:

- Context-Free Parsing
- Probabilistic
- Computes Prefix Probabilities
- Efficient

Slide 6

Context-Free Parsing

What if there are multiple legal parses?

Example: “Yair looked over the paper.”

How does the word “over” function?

[Figure: two alternative parse trees for “Yair looked over the paper”. The first has the nodes S, NP, VP, V, NP; the second has the nodes S, NP, VP, V, NP, PP, P, N, N.]

Slide 7

Probabilistic Parsing

- Use probabilities to find the most likely parse
- Store typical probabilities for words and rules

In this case:

[Figure: the same two parse trees for “Yair looked over the paper”, labeled P = 0.99 and P = 0.01.]

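Slide 8

Prefix Probabilities

How likely is a partial parse?

[Figure: two alternative partial parse trees for the prefix “Yair looked over …”. The first has the nodes S, NP, VP, V, NP; the second has the nodes S, NP, VP, V, NP, PP, P, N, N.]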

Slide 9

Efficiency

- The Earley algorithm (upon which Stolcke builds) is one of the most efficient known parsing algorithms
- Probabilities allow intelligent pruning of the developing parse tree(s)

Slide 10

Parsing Algorithms

How do we construct a parse tree?

- Work from grammar to sentence (top-down)
- Work from sentence to grammar (bottom-up)
- Work from both ends at once (Earley)

Predictably, Earley works best

Slide 11

Earley Parsing Overview

Start with a root constituent, e.g. sentence

Until the sentence is complete, repeatedly:

- Predict: expand constituents as specified in the grammar
- Scan: match constituents with words in the input
- Complete: propagate constituent completions up to their parents

Prediction is top-down, while scanning and completion are bottom-up
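The predict, scan and complete steps can be sketched as a small recognizer. This is a minimal illustration, not the presentation's own code: it uses the grammar from the Earley example slides (S → NP VP, NP → ART N, VP → V ADJ), while the lexicon, the `State` tuple and the `recognize` function are assumptions introduced here. It only answers whether a sentence is grammatical and does not build parse trees or handle empty rules.

```python
from collections import namedtuple

# An Earley state: a dotted rule plus the position where the constituent started.
State = namedtuple("State", "lhs rhs dot start")

# Grammar from the Earley example slides; the lexicon is an assumption.
GRAMMAR = {
    "S": [("NP", "VP")],
    "NP": [("ART", "N")],
    "VP": [("V", "ADJ")],
}
LEXICON = {"the": "ART", "ball": "N", "is": "V", "heavy": "ADJ"}


def recognize(words, root="S"):
    """Return True if `words` can be derived from `root`."""
    # chart[i] holds the states that end at word position i.
    chart = [[] for _ in range(len(words) + 1)]

    def add(i, state):
        if state not in chart[i]:
            chart[i].append(state)

    for rhs in GRAMMAR[root]:
        add(0, State(root, rhs, 0, 0))

    for i in range(len(words) + 1):
        for state in chart[i]:  # the list may grow while we iterate
            if state.dot < len(state.rhs):
                nxt = state.rhs[state.dot]
                if nxt in GRAMMAR:
                    # Predict: expand the expected constituent (top-down).
                    for rhs in GRAMMAR[nxt]:
                        add(i, State(nxt, rhs, 0, i))
                elif i < len(words) and LEXICON.get(words[i]) == nxt:
                    # Scan: the next word has the expected category.
                    add(i + 1, state._replace(dot=state.dot + 1))
            else:
                # Complete: advance every parent waiting for this constituent.
                for parent in chart[state.start]:
                    waiting = parent.dot < len(parent.rhs)
                    if waiting and parent.rhs[parent.dot] == state.lhs:
                        add(i, parent._replace(dot=parent.dot + 1))

    return any(
        s.lhs == root and s.dot == len(s.rhs) and s.start == 0
        for s in chart[len(words)]
    )
```

Calling `recognize("the ball is heavy".split())` accepts the sentence, while a truncated input such as `"the ball is".split()` is rejected because no complete S state spans the whole input.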

Slide 12

Earley Parsing Overview

- Earley parsing uses a chart rather than a tree to develop the parse
- Constituents are stored independently, indexed by word positions in the sentence

Why do this?

- Eliminate recalculation when tree branches are abandoned and later rebuilt
- Concisely represent multiple parses

Slide 22

Probabilistic Parsing

How do we parse probabilistically?

- Assign probabilities to grammar rules and words in lexicon
- Grammar and lexicon “randomly” generate all possible sentences in the language
- P(parse tree) = P(sentence generation)
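To make "P(parse tree) = P(sentence generation)" concrete, the sketch below multiplies the probabilities of every rule and word choice used to generate a tree. All numbers in `RULE_PROB` and `WORD_PROB` are hypothetical values chosen for illustration, and the tuple encoding of trees and the `tree_probability` function are assumptions of this sketch rather than anything defined in the presentation.

```python
from math import prod

# Hypothetical rule and word probabilities, chosen only for illustration.
RULE_PROB = {
    ("S", ("NP", "VP")): 1.0,
    ("NP", ("ART", "N")): 0.7,
    ("NP", ("PN",)): 0.3,
    ("VP", ("V", "ADJ")): 1.0,
}
WORD_PROB = {
    ("ART", "the"): 0.6,
    ("N", "ball"): 0.1,
    ("V", "is"): 0.5,
    ("ADJ", "heavy"): 0.2,
}


def tree_probability(tree):
    """Probability of a tree written as (label, child, ...) or (category, word)."""
    label, *children = tree
    if len(children) == 1 and isinstance(children[0], str):
        # Lexical node: probability of this word given its category.
        return WORD_PROB[(label, children[0])]
    # Phrase node: rule probability times the probabilities of the subtrees.
    rhs = tuple(child[0] for child in children)
    return RULE_PROB[(label, rhs)] * prod(tree_probability(c) for c in children)


TREE = ("S",
        ("NP", ("ART", "the"), ("N", "ball")),
        ("VP", ("V", "is"), ("ADJ", "heavy")))
```

With these made-up numbers, `tree_probability(TREE)` is 1.0 × (0.7 × 0.6 × 0.1) × (1.0 × 0.5 × 0.2), roughly 0.0042.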

Slide 23

Probabilistic Parsing

Terminology

Earley state: each step of the processing that a constituent undergoes. Examples:

- Starting sentence
- Half-finished sentence
- Complete sentence
- Half-finished noun phrase
- etc.

Earley path: a sequence of linked states

Example: the complete parse just described

Slide 24

Probabilistic Parsing

Can represent the parse as a Markov chain:

Markov assumption (state probability is independent of path) applies, due to CFG

[Figure: Markov chain of Earley states. State labels: S ► NP VP Begin; S Begin; NP ► ART N Begin; NP ► PN Begin; NP Half Done; NP Done; S Half Done. One branch is marked “(path abandoned)”. Transition labels: Predict S, Predict NP, Scan “the”, Scan “ball”, Complete NP.]

Slide 25

Probabilistic Parsing

- Every Earley path corresponds to a parse tree
- P(tree) = P(path)
- Assign a probability to each state transition:
  - Prediction: probability of grammar rule
  - Scanning: probability of word in lexicon
  - Completion: accumulated (“inner”) probability of the finished constituent
- P(path) = product of P(transition)s

Slide 38

Prefix Probabilities

- Current algorithm reports parse tree probability when the sentence is completed
- What if we don’t have a full sentence?
- Probability is tracked by constituent (“inner”), rather than by path (“forward”)
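As a point of reference for what a prefix probability measures, the sketch below computes it by brute force: it enumerates every sentence that a tiny grammar can generate and sums the probabilities of those that begin with the given words. This is deliberately not the incremental method the presentation describes; it only pins down the quantity that a forward probability is meant to track. The grammar, its probabilities, and the names `RULES`, `expansions` and `prefix_probability` are all hypothetical, and the approach works only because this toy grammar has no recursion.

```python
from itertools import product

# Hypothetical toy PCFG without recursion, so it generates finitely many sentences.
RULES = {
    "S": [(("NP", "VP"), 1.0)],
    "NP": [(("ART", "N"), 0.7), (("PN",), 0.3)],
    "VP": [(("V", "ADJ"), 1.0)],
    "ART": [(("the",), 1.0)],
    "N": [(("ball",), 0.5), (("paper",), 0.5)],
    "PN": [(("Yair",), 1.0)],
    "V": [(("is",), 1.0)],
    "ADJ": [(("heavy",), 0.8), (("red",), 0.2)],
}


def expansions(symbol):
    """Yield (words, probability) for every derivation from `symbol`."""
    if symbol not in RULES:  # a terminal word derives only itself
        yield (symbol,), 1.0
        return
    for rhs, p in RULES[symbol]:
        # Combine one derivation per right-hand-side symbol.
        for combo in product(*(list(expansions(s)) for s in rhs)):
            words = tuple(w for part, _ in combo for w in part)
            prob = p
            for _, q in combo:
                prob *= q
            yield words, prob


def prefix_probability(prefix, root="S"):
    """Sum the probabilities of all derivations whose sentence starts with `prefix`."""
    prefix = tuple(prefix)
    return sum(p for words, p in expansions(root)
               if words[:len(prefix)] == prefix)
```

With these made-up numbers, `prefix_probability(["the"])` is about 0.7, since every sentence starting with "the" must use the NP → ART N rule, and the empty prefix has probability 1 because the grammar's sentence probabilities sum to one.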